What is a program?


Here, for those trying programming for the first time, I will give an overview of what a program is.

A program is the most fundamental way to operate a computer.

Programs are written in what are commonly called programming languages, and it is through programming languages that we operate computers.

Many people know that programming languages are languages for computers, yet programs are still hard for them to understand.

This is because programming languages differ significantly from natural languages.

Natural language refers to the languages used by humans.

Understanding the difference between programming languages and natural languages is often the first hurdle in learning to program.

This chapter will explain the differences between the two languages through specific examples.


Simple grammar

The first difference is that programming languages have simpler grammars than natural languages.


The grammar of a programming language is nothing more than a fixed order of words.

In this chapter, we will use a hypothetical programming language as an example.

Consider the following example.

Source code

```
REFLECT HELLO
```

This program means "display the word HELLO on the screen."

Replacing the command with a Japanese word keeps exactly the same word order:

Source code (Japanese word)

```
REFLECT HELLO
```

With this, Hello will be displayed on the screen.

It consists of only two words, yet this is all there is to the grammar of a programming language.

Most of the words used in a programming language are verbs and objects.

In the program above, REFLECT is the verb and the string HELLO is the object.

The verb comes first because computers were developed mainly in English-speaking countries, and English places the verb before its object (Japanese, by contrast, places the verb last).

In natural language, the program above means the following.

Natural-language translation

Display Hello on the screen.

Comparing these two, you can see the simplicity of the programming language's grammar.

| Language | Text |
|---|---|
| Natural language | Display Hello on the screen. |
| Programming language | REFLECT HELLO |
| Programming language (Japanese word) | REFLECT HELLO |

People unfamiliar with programming may wonder whether such a simple grammar is really enough.

However, as mentioned earlier, programming languages are languages for controlling computers.

They only need the minimum expressiveness required to convey instructions to a computer.

Those who looked closely at the program above may have noticed that the phrase "on the screen" does not appear in it.

This is because REFLECT is defined to mean "display on the screen."

That definition was chosen because it is convenient: the rules were decided by humans, for the convenience of humans.

If you want to state explicitly that the output goes to the screen, you can write it as follows:

Source code

```
REFLECT Screen HELLO
```

Written this way, you can also change where HELLO is sent:

Source code

```
REFLECT Printer HELLO
```

Rewritten like this, the program makes the printer print Hello.
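To make the "verb first, then object" rule concrete, here is a minimal Python sketch of an interpreter for the hypothetical REFLECT language. The function name `run_reflect`, the `outputs` dictionary standing in for real devices, and the rule that a missing target means `Screen` are all assumptions for illustration; the language itself is imaginary.

```python
def run_reflect(statement, outputs):
    """Run one statement of the hypothetical REFLECT language.

    Grammar assumed here: REFLECT [target] object
    `outputs` maps a target name (e.g. "Screen") to the things sent to it.
    """
    words = statement.split()
    if not words or words[0] != "REFLECT":
        raise ValueError(f"unknown statement: {statement!r}")
    if len(words) == 2:
        # REFLECT HELLO: no target given, so it is defined to mean the screen
        target, obj = "Screen", words[1]
    elif len(words) == 3:
        # REFLECT Printer HELLO: the target is named explicitly
        target, obj = words[1], words[2]
    else:
        raise ValueError("expected: REFLECT [target] object")
    outputs.setdefault(target, []).append(obj)
    return outputs


devices = {}
run_reflect("REFLECT HELLO", devices)
run_reflect("REFLECT Printer HELLO", devices)
print(devices)  # {'Screen': ['HELLO'], 'Printer': ['HELLO']}
```

Because the default target is fixed by the interpreter rather than written in the statement, `REFLECT HELLO` and `REFLECT Screen HELLO` do the same thing here.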

Clear meaning

In the previous section, I explained that the grammar of a programming language is very simple.

But that is not the only difference between programming languages and natural languages.

The second difference is that programming languages are more precise than natural languages.

There is absolutely no room for ambiguity in the program; every meaning is strictly and clearly defined.

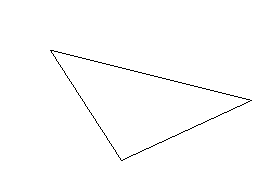



For example, consider drawing a triangle.

With a person, simply saying "draw a triangle" may be enough.

For a computer, however, "draw a triangle" is far too abstract.

You must give it a clear, complete procedure with absolutely no ambiguity.

First, you must specify where to begin, and the starting point must be given with absolute precision.

To pin down a location on Earth exactly, you would use latitude and longitude.

In a similar way, we will specify the starting point as a certain number of pixels to the right of and below the top-left corner of the screen.

Next, we specify the point the line should be drawn to.

If you choose these points so that the lines form a triangle, a triangle is drawn.

Here is the example written in an English-style programming language.

Source code

```
LINE 50,50 - 250,100
LINE 250,100 - 120,160
LINE 120,160 - 50,50
```

In a programming language that uses Japanese words, it would look like this:

Source code

```
Line from 50,50 to 250,100
Line from 250,100 to 120,160
Line from 120,160 to 50,50
```

In plain language, it means the following.

Starting from the point 50 pixels to the right of and 50 pixels below the top-left corner of the screen, draw a line to the point 250 pixels to the right and 100 pixels below.

From the point 250 pixels to the right and 100 pixels below the top-left corner, draw a line to the point 120 pixels to the right and 160 pixels below.

From the point 120 pixels to the right and 160 pixels below the top-left corner, draw a line back to the point 50 pixels to the right and 50 pixels below.

Following these steps carefully will produce an image like this.

As shown above, a key characteristic of programming languages is that they give instructions as extremely clear procedures.

The instructions must be unambiguous and prescribe every action in detail.

As another example, suppose you want a robot to make you a cup of instant noodles.

Simply telling it to "make instant noodles" isn't enough.

"Move one meter forward, turn 90 degrees to the right, extend your arm 10 centimeters forward, ..."

Everything, from taking out the noodles to filling the kettle with water, lighting the flame, and pouring the hot water, must be given as a clear, explicit procedure.

Every action must be meticulously specified by a human.

Also, to a robot (a computer), these actions are just actions, each one independent of the others.

The robot does not recognize that its actions are the steps for making a cup of noodles.

It simply performs one action after another, as instructed.

If it bumps into something while moving and spills the hot water from the kettle, that is just one more operation to the computer, not a mistake.

The robot carries on with its programmed actions, holding a kettle with no hot water in it.
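The robot's blind, step-by-step behaviour can be sketched in a few lines of Python. The action names, the 500 ml figure, and the `bump_before_step` parameter are hypothetical; the point is only that each step runs on its own, and nothing checks whether the overall goal still makes sense.

```python
def run_robot(program, bump_before_step=None):
    """Execute each action in order, exactly as written (hypothetical robot)."""
    kettle_ml = 0          # hot water currently in the kettle
    log = []
    for step, action in enumerate(program):
        if step == bump_before_step:
            kettle_ml = 0  # the robot bumps into something and the water spills
        if action == "fill kettle":
            kettle_ml = 500
        if action == "pour into cup":
            log.append(f"pour into cup ({kettle_ml} ml)")
            kettle_ml = 0
        else:
            log.append(action)
    return log  # every step is carried out; the spill is never treated as an error


steps = ["take out noodles", "fill kettle", "light flame", "pour into cup"]
print(run_robot(steps)[-1])                      # pour into cup (500 ml)
print(run_robot(steps, bump_before_step=3)[-1])  # pour into cup (0 ml)
```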

Compared with instructing a person, this may already seem very strict and unambiguous.

To be honest, though, even this procedure still leaves room for ambiguity.

In fact, even the instruction "draw a line" is quite ambiguous.

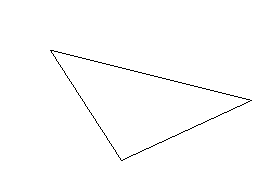

A computer screen is made up of points, called pixels.

We must specify every single location on the screen where a pixel is to be drawn.
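Working out every pixel by hand is tedious, so in practice a program computes them. The Python sketch below expands each LINE command of the triangle into individual pixels using Bresenham's line algorithm, a standard integer-only technique chosen here for illustration (the text does not name an algorithm); `line_pixels` is a hypothetical name. Because the algorithm has its own rounding rule, the pixels it picks can differ slightly from a hand-written DOT list.

```python
def line_pixels(x0, y0, x1, y1):
    """Return every pixel (x, y) on the line from (x0, y0) to (x1, y1).

    Integer-only Bresenham line algorithm; works in all directions.
    """
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy                  # running error term
    pixels = []
    while True:
        pixels.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:               # step one pixel in x
            err += dy
            x0 += sx
        if e2 <= dx:               # step one pixel in y
            err += dx
            y0 += sy
    return pixels


# The three LINE commands of the triangle, expanded into individual DOTs.
triangle = set()
for start, end in [((50, 50), (250, 100)), ((250, 100), (120, 160)), ((120, 160), (50, 50))]:
    triangle.update(line_pixels(*start, *end))

print(line_pixels(50, 50, 250, 100)[:4])  # [(50, 50), (51, 50), (52, 51), (53, 51)]
```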

Here is an example that explicitly specifies the position of each pixel needed to draw the triangle.

Source code

```
DOT 50,50
DOT 51,50
DOT 52,50
DOT 53,50
DOT 54,51
DOT 55,51
DOT 56,51
・
・
```

Frighteningly, however, even the command "draw a pixel" is ambiguous.

Fundamentally, a computer does not know the concept of a pixel.

Therefore, we must spell out exactly what "draw a pixel" means.

If the command "draw a pixel" were made perfectly unambiguous, it would look like this:

Perfectly clear instruction

Set the 32050th value among the numbers stored in the OO-th device connected to the computer to 0.

This is an instruction that is very difficult for humans to understand.

Here, consider a display 640 pixels wide as an example.

Counting from 0, position 32050 equals 50 × 640 + 50, so the 32050th point is 50 dots below and 50 dots to the right of the top-left corner of the screen.

In this context, the OO-th device refers to the display (more precisely, the video card).

And "set it to 0" refers to the color: 0 represents black.
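The arithmetic behind "the 32050th value" can be written out directly. This Python sketch models the display's memory as a flat list holding one number per pixel, row after row. The 640-pixel width and 0 for black come from the text; the 480-pixel height, the starting value 255 for every pixel, and the names `pixel_index` and `draw_pixel` are assumptions.

```python
WIDTH, HEIGHT = 640, 480   # 640 from the text; 480 is an assumed height
BLACK = 0                  # the text's example: 0 represents black

# Hypothetical display memory: one number per pixel, stored row by row.
display_memory = [255] * (WIDTH * HEIGHT)   # 255 = some non-black color (assumed)


def pixel_index(x, y, width=WIDTH):
    """Position of pixel (x, y) in display memory, counting from 0."""
    return y * width + x


def draw_pixel(x, y, color=BLACK):
    """'Draw a pixel' spelled out: set one stored number to a color value."""
    display_memory[pixel_index(x, y)] = color


draw_pixel(50, 50)
print(pixel_index(50, 50))     # 32050
print(divmod(32050, WIDTH))    # (50, 50): row 50, column 50
print(display_memory[32050])   # 0
```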

In this way, if we break a computer's operation down to its most fundamental level, this is all a computer can do:

- Store a single number. (Storage)
- Determine whether a single number is zero. (Determination)
- Add two numbers. (Operation)

Furthermore, "addition" here means only the four single-bit cases: 0+0, 0+1, 1+0, and 1+1.

So a computer has only three basic abilities.

Yet by combining these three, it can carry out even the most complex calculations.
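To see how "only four cases of addition" can still add large numbers, here is a Python sketch of a ripple-carry adder that uses nothing but a lookup table of those four cases. The table name `HALF_ADD` and the 16-bit width are assumptions; Python's shift and bitwise operators are used only to read and write individual bits.

```python
# The only additions the hardware "knows": one bit plus one bit.
# Each entry maps (a, b) to (sum bit, carry bit); 1 + 1 = binary 10.
HALF_ADD = {
    (0, 0): (0, 0),
    (0, 1): (1, 0),
    (1, 0): (1, 0),
    (1, 1): (0, 1),
}

WIDTH = 16  # assumed register width in bits


def full_add(a, b, carry_in):
    """Add three bits using only the four single-bit cases above."""
    s1, c1 = HALF_ADD[(a, b)]
    s2, c2 = HALF_ADD[(s1, carry_in)]
    # c1 and c2 are never both 1, so adding them never overflows.
    carry_out, _ = HALF_ADD[(c1, c2)]
    return s2, carry_out


def add(x, y):
    """Add two WIDTH-bit numbers one bit at a time (ripple carry)."""
    result, carry = 0, 0
    for i in range(WIDTH):
        a = (x >> i) & 1          # read bit i of x
        b = (y >> i) & 1          # read bit i of y
        s, carry = full_add(a, b, carry)
        result |= s << i          # write bit i of the result
    return result                 # a carry out of the top bit is dropped


print(add(13, 29))  # 42
```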

However, working at this level is far too inconvenient for humans.

For that reason, modern computers have gained many features that make them easier for humans to use, and programming, too, now offers a variety of convenient features.

So you don't need to think quite this rigorously and meticulously.

However, even today (as of 2023), the fundamental workings of computers have not changed at all.

All programs are therefore ultimately broken down into very simple calculations.

A computer really is just repeating simple calculations (around a trillion times per second).

When writing a program, it's important to remember that a computer is a strict machine.

Everything is calculation.

As we glimpsed at the end of the previous section, the biggest difference between programming languages and natural languages lies in their purpose.

The third difference is that programming languages are designed for computation.

In a computer, everything is a number; no non-numeric concepts exist.

When drawing the triangle in the previous section, too, every position was specified as a number.

Everything handled in a programming language is ultimately a number.

In essence, a programming language is a language for calculation.
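Even text is numbers inside a computer. In Python, the built-in `ord` and `chr` convert between a character and its character code (a Unicode code point, which matches ASCII for these letters), so the HELLO from the first section can be seen as a list of numbers. The helper names `to_codes` and `from_codes` are hypothetical.

```python
def to_codes(text):
    """Return the character code of each character in text."""
    return [ord(ch) for ch in text]


def from_codes(codes):
    """Turn a list of character codes back into text."""
    return "".join(chr(c) for c in codes)


codes = to_codes("HELLO")
print(codes)              # [72, 69, 76, 76, 79]
print(from_codes(codes))  # HELLO
```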

However, as explained in the previous section, the only calculation a computer can perform is addition.

By combining additions, it can also carry out more complex calculations such as subtraction, multiplication, and division.
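Here is a rough sketch of multiplication built from just addition and the "is it zero?" test. This Python version assumes a 16-bit machine: `add` uses Python's `+` with a 16-bit wrap as a stand-in for the hardware adder, and subtracting 1 is done by adding 0xFFFF (all ones), which wraps around to the same result in 16 bits. All names are hypothetical.

```python
WIDTH = 16
MASK = (1 << WIDTH) - 1   # 0xFFFF: keeps every value within 16 bits


def add(x, y):
    """Stand-in for the hardware adder: addition that wraps at 16 bits."""
    return (x + y) & MASK


def decrement(n):
    """Adding 0xFFFF and wrapping is the same as subtracting 1."""
    return add(n, MASK)


def multiply(x, y):
    """Multiplication as repeated addition: add x to the total, y times."""
    total = 0
    while y != 0:             # determination: is the counter zero yet?
        total = add(total, x)
        y = decrement(y)
    return total


print(multiply(6, 7))  # 42
```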

Ultimately, all a computer can do is calculate.

You might then think it is not much different from a calculator, which can also perform basic arithmetic.

The difference is that a computer can perform calculations in an order specified by humans.

A programming language is a language for specifying the order of calculations.
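Converting Celsius to Fahrenheit is a familiar illustration (chosen here, not taken from the text) of why the specified order matters: the same three operations give a different answer when performed in a different order. The function names are hypothetical.

```python
def fahrenheit(celsius):
    """Celsius to Fahrenheit: multiply by 9, divide by 5, then add 32."""
    step1 = celsius * 9
    step2 = step1 / 5
    return step2 + 32


def fahrenheit_wrong_order(celsius):
    """The same three operations, but with the addition done first."""
    step1 = celsius + 32
    step2 = step1 * 9
    return step2 / 5


print(fahrenheit(100))              # 212.0
print(fahrenheit_wrong_order(100))  # 237.6
```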

Modern computers combine the four arithmetic operations (addition, subtraction, multiplication, and division) in incredibly complex ways, and because they offer features that can be operated intuitively with a mouse, it is hard to feel that you are really dealing with numbers.

However, if you stay keenly aware of numbers while learning a programming language, I think even grammar that seems difficult at first will become clear.


About This Site

Learning C language through suffering (Kushi C) is the definitive introduction to the C language.

It systematically explains the basic features of the C language.

Its quality matches or exceeds that of commercially available books.